In this third post of our multi-part series on load testing video streaming at scale, we evaluate Media Processing Functions (MPFs) placed in different parts of the network. We focus on achieving the best results when streaming linear Live channels.

In the first part of this series, we introduced the testbed used for our evaluation, which is based on MPEG’s Network Based Media Processing (NBMP). We also introduced the term Distributed Media Processing Workflows (DMPWs), which consist of multiple MPFs running at different geographical points in the network. These distributed MPFs can provide performance gains in a DMPW by being closer to the end client devices. In the second part of the series, we evaluated different Video on Demand (VOD) setups that rely on remote object-based cloud storage, and how to obtain the best performance from them.

Through the various tests presented in this blog we will determine:

- The best cloud instance type for a specific Live video streaming configuration.

- The linear scalability of Unified Origin in Live video streaming use cases.

- The use of an Origin shield cache in front of Unified Origin.

- The best configuration of edge based processing at nodes in close proximity to the user.

Testbed setup

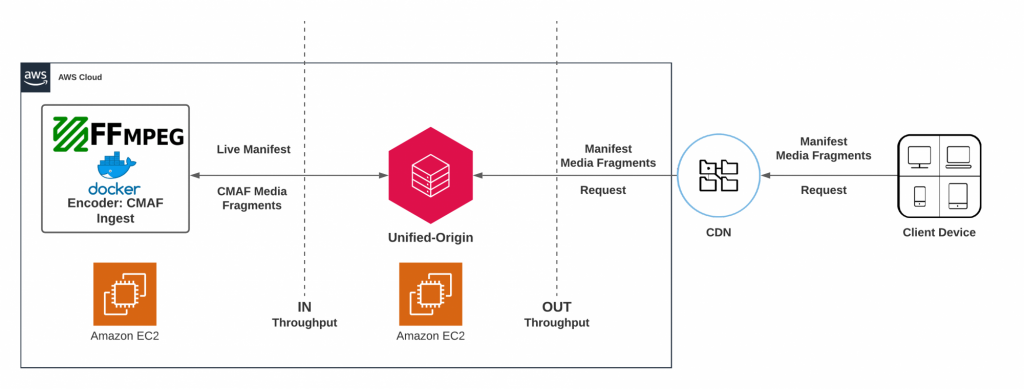

The most popular setups for Live video streaming by Over-the-top (OTT) media services and TV broadcasters consist of four elements: (1) a Media Source (e.g., a Live encoder); (2) an Origin server acting as the Media Processing Function; (3) a Content Delivery Network (CDN); and (4) client devices (Figure 1). This type of setup can use one or multiple CDNs to improve media delivery towards the client devices by caching the most watched (hot) content and reducing latency. The reduction in latency comes from being geographically closer to the client device.

In this blog we focus on the media delivery between the media origination and the CDN. Therefore, for evaluation purposes, we removed the CDN and client devices and replaced them with a Workload generator that generates realistic Live video streaming scenarios (Figure 2).

Setting up a publishing point for Live

The following code snippet is an example of how to create a Server Manifest (.isml). It uses mp4split (Unified Packager) to create a Live publishing point.

    #!/bin/bash
    set -e
    mkdir -p /var/www/origin/live/test
    mp4split --license-key=/etc/usp-license.key -o test.isml \
      --archiving=1 \
      --archive_length=3600 \
      --archive_segment_length=1800 \
      --dvr_window_length=30 \
      --restart_on_encoder_reconnect \
      --mpd.min_buffer_time=48/25 \
      --mpd.suggested_presentation_delay=48/25 \
      --hls.minimum_fragment_length=48/25 \
      --mpd.minimum_fragment_length=48/25 \
      --mpd.segment_template=time \
      --hls.client_manifest_version=4 \
      --hls.fmp4
    mv test.isml /var/www/origin/live/test/
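The value 48/25 that recurs in the options above reads as a duration in seconds written as an exact fraction: 48 frames at 25 frames per second, the same GOP size and frame rate used in the encoder settings in this post. The following is a minimal Python sketch of that arithmetic. The helper names are ours and purely illustrative, and the number of fragments needed to cover the 30-second DVR window is a plain calculation, not something reported by mp4split.

```python
from fractions import Fraction
import math


def fragment_duration(gop_frames: int, framerate: int) -> Fraction:
    """Duration in seconds of one GOP-aligned fragment, kept as an exact fraction."""
    return Fraction(gop_frames, framerate)


def fragments_to_cover(window_seconds: int, fragment: Fraction) -> int:
    """Smallest whole number of fragments whose total duration covers the window."""
    return math.ceil(Fraction(window_seconds) / fragment)


frag = fragment_duration(48, 25)
print(frag, float(frag))             # 48/25 1.92
print(fragments_to_cover(30, frag))  # 16
```

Keeping the duration as a fraction avoids rounding drift when many fragments are added up, which is presumably why the options are written as 48/25 rather than 1.92.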

Media source (Live encoder)

For this Live video streaming use case, we used the open-source FFmpeg software to emulate the ingest of CMAF tracks towards Unified Origin. Specifically, we used the DASH-IF Live Media Ingest Protocol - Interface 1.

A Live Origin demo using the DASH-IF Live Media Ingest Protocol can be found in our GitHub repository.

In this case, the Live Media Source contains eight video and two audio CMAF tracks generated by FFmpeg. The Docker container sends all media tracks to Unified Origin through an HTTP POST method. This process is described in more detail in the DASH-IF Live Media Ingest Protocol document. Table 1 presents the media specifications of the emulated CMAF tracks.

| Track | Resolution | Bitrate (kbps) | Codec |
|---|---|---|---|
| video-1.cmfv | 256x144 | 100 | libx264 |
| video-2.cmfv | 384x216 | 150 | libx264 |
| video-3.cmfv | 512x288 | 300 | libx264 |
| video-4.cmfv | 640x360 | 500 | libx264 |
| video-5.cmfv | 768x432 | 1000 | libx264 |
| video-6.cmfv | 1280x720 | 1500 | libx264 |
| video-7.cmfv | 1280x720 | 2500 | libx264 |
| video-8.cmfv | 1920x1080 | 3500 | libx264 |
| audio-1.cmfa | N/A | 64 | aac |
| audio-2.cmfa | N/A | 128 | aac |

Table 1. CMAF tracks’ bitrate ladder generated by FFmpeg.
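The nominal ingest bitrate of one emulated Live channel follows directly from Table 1. The short Python sketch below simply sums the ladder; the function name is illustrative, and the result ignores container and HTTP overhead, so measured ingest throughput can be expected to be somewhat higher.

```python
# Nominal bitrates from Table 1, in kbps.
VIDEO_KBPS = [100, 150, 300, 500, 1000, 1500, 2500, 3500]
AUDIO_KBPS = [64, 128]


def ladder_total_kbps(video, audio):
    """Sum of all track bitrates that one encoder posts to the publishing point."""
    return sum(video) + sum(audio)


total_kbps = ladder_total_kbps(VIDEO_KBPS, AUDIO_KBPS)
print(total_kbps)         # 9742
print(total_kbps / 1000)  # 9.742
```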

Below we present an example of a Docker Compose file that uses FFmpeg to encode eight video and two audio CMAF tracks, and then sends the media to Unified Origin’s publishing point URI.

    # docker-compose.yml
    version: "2.1"
    services:
      ffmpeg-1:
        image: ffmpeg:latest
        environment:
          - PUB_POINT_URI=http://${YOUR_ORIGIN_HOSTNAME}/test/test.isml
          - 'TRACKS={
            "video": [
              { "width": 256, "height": 144, "bitrate": "100k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 },
              { "width": 384, "height": 216, "bitrate": "150k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 },
              { "width": 512, "height": 288, "bitrate": "300k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 },
              { "width": 640, "height": 360, "bitrate": "500k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 },
              { "width": 768, "height": 432, "bitrate": "1000k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 },
              { "width": 1280, "height": 720, "bitrate": "1500k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 },
              { "width": 1280, "height": 720, "bitrate": "2500k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 },
              { "width": 1920, "height": 1080, "bitrate": "3500k", "codec": "libx264", "framerate": 25, "gop": 48, "timescale": 25 }
            ],
            "audio": [
              { "samplerate": 48000, "bitrate": "64k", "codec": "aac", "language": "eng", "timescale": 48000, "frag_duration_micros": 1920000 },
              { "samplerate": 48000, "bitrate": "128k", "codec": "aac", "language": "eng", "timescale": 48000, "frag_duration_micros": 1920000 }
            ] }'
          - MODE=CMAF
        depends_on:
          live-origin:
            condition: service_healthy
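The video and audio settings in the TRACKS variable are chosen so that both media types produce fragments of the same duration. The Python sketch below checks this for one video and one audio entry. It assumes that the audio is AAC-LC with 1024 samples per frame (the compose file only says "aac"), and the helper names are ours, not part of FFmpeg or Unified Origin.

```python
import json
from fractions import Fraction

AAC_SAMPLES_PER_FRAME = 1024  # assumption: AAC-LC frame size

# One representative entry per media type, copied from the TRACKS variable.
TRACKS = json.loads("""
{
  "video": [{"framerate": 25, "gop": 48}],
  "audio": [{"samplerate": 48000, "frag_duration_micros": 1920000}]
}
""")


def video_fragment_seconds(track):
    """A video fragment spans one GOP: gop frames at framerate frames per second."""
    return Fraction(track["gop"], track["framerate"])


def audio_fragment_seconds(track):
    """Audio fragment duration is given directly in microseconds."""
    return Fraction(track["frag_duration_micros"], 1_000_000)


def aac_frames_per_fragment(track):
    """Number of AAC frames in one audio fragment (whole only if well aligned)."""
    samples = audio_fragment_seconds(track) * track["samplerate"]
    return samples / AAC_SAMPLES_PER_FRAME


video = TRACKS["video"][0]
audio = TRACKS["audio"][0]
print(video_fragment_seconds(video) == audio_fragment_seconds(audio))  # True
print(aac_frames_per_fragment(audio))                                  # 90
```

Under that assumption, a 1.92 s audio fragment holds exactly 90 AAC frames, so audio and video fragment boundaries line up without drift.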

Origin configuration

We modified the IPv4 local port range on the Linux server running the Apache web server to minimize the probability of TCP port exhaustion.

    #!/bin/bash
    # The configuration file is located in '/proc/sys/net/ipv4/ip_local_port_range'
    sudo sysctl -w net.ipv4.ip_local_port_range="2000 65535" # from 32768 60999
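To put the change in perspective, the local port range bounds how many concurrent outbound connections towards a single destination address and port can be open at once. A small Python sketch of the arithmetic (the function name is illustrative):

```python
def port_count(low: int, high: int) -> int:
    """Number of ports in the inclusive range [low, high]."""
    return high - low + 1


default = port_count(32768, 60999)
tuned = port_count(2000, 65535)
print(default, tuned, round(tuned / default, 2))  # 28232 63536 2.25
```

The wider range roughly doubles the number of available local ports. Note that a range starting at 2000 can overlap with ports used by other services on the same host, so that is worth checking before applying it elsewhere.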

We used the following Apache web server configuration to enable the Unified Origin module with a single virtual host. We also set the DocumentRoot to the directory that holds the publishing point with our Server Manifest file (.isml).

    LoadModule smooth_streaming_module /usr/lib/apache2/modules/mod_smooth_streaming.so
    UspLicenseKey /etc/usp-license.key
    Listen 0.0.0.0:80
    <VirtualHost *:80>
      ServerAdmin info@unified-streaming.com
      ServerName origin-live
      DocumentRoot /var/www/origin/live
      <Directory />
        Require all granted
        Satisfy Any
      </Directory>
      AddHandler smooth-streaming.extensions .ism .isml .mp4
      <Location />
        UspHandleIsm on
        UspHandleF4f on
      </Location>
      Options -Indexes
      # If not specified, the global error log is used
      LogFormat "%{%FT%T}t.%{usec_frac}t%{%z}t %v:%p %h:%{remote}p \"%r\" %>s %I %O %{us}T" precise
      LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-agent}i\"" netdata
      ErrorLog /var/log/apache2/evaluation.unified-streaming.com-error.log
      CustomLog /var/log/apache2/evaluation.unified-streaming.com-access.log precise
      CustomLog /var/log/apache2/netdata_access.log netdata
      LogLevel warn
      HostnameLookups Off
      UseCanonicalName On
      ServerSignature On
      LimitRequestBody 0
      Header always set Access-Control-Allow-Headers "origin, range"
      Header always set Access-Control-Allow-Methods "GET, HEAD, OPTIONS"
      Header always set Access-Control-Allow-Origin "*"
    </VirtualHost>
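The `precise` log format above records the bytes received (`%I`), the bytes sent (`%O`) and the time taken in microseconds (`%{us}T`) for every request, which is enough to derive throughput figures from the access log. The Python sketch below illustrates one way to do that. It is not the tooling used for the results in this post: the regular expression is our own reading of the LogFormat string, and the sample lines, including the segment paths, are hypothetical.

```python
import re

# Fields: time vhost:port client:port "request" status bytes_in bytes_out duration_us
PRECISE = re.compile(
    r'^(?P<time>\S+) (?P<vhost>\S+):(?P<port>\d+) '
    r'(?P<client>\S+):(?P<client_port>\d+) '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) '
    r'(?P<bytes_in>\d+) (?P<bytes_out>\d+) (?P<duration_us>\d+)$'
)


def average_out_mbps(lines, window_seconds):
    """Average outgoing throughput in Mbit/s over the window, from %O byte counts."""
    total_bytes = 0
    for line in lines:
        match = PRECISE.match(line.strip())
        if match:  # lines in any other format are skipped
            total_bytes += int(match.group("bytes_out"))
    return total_bytes * 8 / window_seconds / 1e6


# Hypothetical log lines: one MPD, one audio and one video segment request.
SAMPLE = [
    '2021-11-10T12:00:00.000120+0100 origin-live:80 10.0.0.5:51234 '
    '"GET /test/test.isml/.mpd HTTP/1.1" 200 210 4096 1530',
    '2021-11-10T12:00:00.004310+0100 origin-live:80 10.0.0.5:51234 '
    '"GET /test/test.isml/hypothetical-audio-segment HTTP/1.1" 200 230 30720 820',
    '2021-11-10T12:00:00.009870+0100 origin-live:80 10.0.0.5:51234 '
    '"GET /test/test.isml/hypothetical-video-segment HTTP/1.1" 200 230 840000 2950',
]

print(round(average_out_mbps(SAMPLE, 1.92), 3))  # 3.645
```

In Apache, the `%I` and `%O` fields are provided by mod_logio, so that module needs to be enabled for this log format to work.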

Workload Generator

The Media Sink (or Workload Generator) generates a load by gradually increasing the number of emulated workers from zero up to 50. Each worker emulates MPEG-DASH media requests over HTTP(S) as follows:

1. Request the MPD

2. Parse the MPD and metadata

3. Generate the segment URLs

4. Set the last available media segment with temporary index ‘k’ = 0

5. Request audio segment ‘k’ with a bitrate of 128 kbps

6. Request video segment ‘k’ with a bitrate of 3500 kbps

7. If ‘k’ == total number of available media segments ‘N’, go to step 1; else increment ‘k’ by 1 and go to step 5
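The steps above can be sketched in Python as a single pass of the worker loop. This is an illustration under stated assumptions, not the actual Workload generator: `fetch` and `parse_segment_ids` are injected placeholders, the URL layout is hypothetical, and the sketch walks the segment list in order. A real worker would call this function in an endless loop.

```python
from typing import Callable, List


def worker_pass(fetch: Callable[[str], bytes],
                base_url: str,
                parse_segment_ids: Callable[[bytes], List[str]]) -> int:
    """Run steps 1 to 7 once and return the number of HTTP requests issued."""
    mpd = fetch(f"{base_url}/.mpd")                    # step 1: request the MPD
    segment_ids = parse_segment_ids(mpd)               # steps 2-3: parse, build URLs
    requests = 1
    for segment_id in segment_ids:                     # steps 4 and 7: walk k over N
        fetch(f"{base_url}/audio-128k/{segment_id}")   # step 5: audio at 128 kbps
        fetch(f"{base_url}/video-3500k/{segment_id}")  # step 6: video at 3500 kbps
        requests += 2
    return requests


# Stand-ins so the sketch runs without a network or a real MPD parser.
log: List[str] = []


def fake_fetch(url: str) -> bytes:
    log.append(url)
    return b"0\n48\n96" if url.endswith("/.mpd") else b""


def fake_parse(mpd: bytes) -> List[str]:
    return mpd.decode().split()


count = worker_pass(fake_fetch, "http://origin.example/test/test.isml", fake_parse)
print(count)   # 7
print(log[0])  # http://origin.example/test/test.isml/.mpd
```

With N available segments, one pass issues 1 + 2N requests, which is why a shorter list of available segments makes MPD requests a larger share of the load.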

Type of cloud instance for Live video streaming

In Part 2 of this blog series, we focused on finding the best conditions for VOD streaming use cases with remote storage access. Because we did not have to store large amounts of data such as media, we selected the compute optimized instance family, which suited that use case best.

However, the most common Live video streaming scenarios require storing the media that is ingested from the encoder, which can later be made available as a VOD asset in an ISOBMFF format (e.g., CMAF, fragmented MP4).

To identify the best cloud instance for Live video streaming, we classified the publishing point configuration of a Live channel into three use cases:

- A: Pure Live

- B: Archiving with no DVR window

- C: Archiving with an enabled DVR window

Table 2 provides the main configuration parameters used to create a Live publishing point. For more details about each parameter, please refer to our documentation or our Live Best Practices.

| Use case/configuration | Pure Live (A) | Archiving, no ‘DVR’ (B) | Archiving and ‘DVR’ (C) |
|---|---|---|---|
| archiving | true | true | true |
| archive_length | 120 | 86400 (24h) | 86400 (24h) |
| archive_segment_length | 60 | 600 (10m) | 600 (10m) |
| dvr_window_length | 30 | 30 | 3600 (1h) |

Table 2. Configuration of Live publishing point of most popular Live video streaming scenarios.

The following script shows an example of how we created a Live publishing point for each use case. We replaced each parameter’s value according to Table 2.

    #!/bin/bash
    set -e
    mkdir -p /var/www/origin/live/test
    mp4split --license-key=/etc/usp-license.key -o test.isml \
      --archiving=${YOUR_VALUE} \
      --archive_length=${YOUR_VALUE} \
      --archive_segment_length=${YOUR_VALUE} \
      --dvr_window_length=${YOUR_VALUE} \
      --restart_on_encoder_reconnect \
      --mpd.min_buffer_time=48/25 \
      --mpd.suggested_presentation_delay=48/25 \
      --hls.minimum_fragment_length=48/25 \
      --mpd.minimum_fragment_length=48/25 \
      --mpd.segment_template=time \
      --hls.client_manifest_version=4 \
      --hls.fmp4
    mv test.isml /var/www/origin/live/test/
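As an illustration of how the placeholders map to Table 2, the Python sketch below builds the mp4split argument list for each use case. It only assembles the command and does not run it. The dictionary layout and function name are ours, and `archiving` is written as `1`, following the first script in this post, where Table 2 says `true`.

```python
import shlex

# Values from Table 2; 'archiving' uses 1 as in the first script of this post.
USE_CASES = {
    "A": {"archiving": 1, "archive_length": 120,
          "archive_segment_length": 60, "dvr_window_length": 30},
    "B": {"archiving": 1, "archive_length": 86400,
          "archive_segment_length": 600, "dvr_window_length": 30},
    "C": {"archiving": 1, "archive_length": 86400,
          "archive_segment_length": 600, "dvr_window_length": 3600},
}

# Options shared by all three use cases, copied from the script above.
COMMON = [
    "--restart_on_encoder_reconnect",
    "--mpd.min_buffer_time=48/25",
    "--mpd.suggested_presentation_delay=48/25",
    "--hls.minimum_fragment_length=48/25",
    "--mpd.minimum_fragment_length=48/25",
    "--mpd.segment_template=time",
    "--hls.client_manifest_version=4",
    "--hls.fmp4",
]


def mp4split_command(use_case, output="test.isml",
                     license_key="/etc/usp-license.key"):
    """Return the mp4split argument list for use case 'A', 'B' or 'C'."""
    args = ["mp4split", f"--license-key={license_key}", "-o", output]
    args += [f"--{name}={value}" for name, value in USE_CASES[use_case].items()]
    return args + COMMON


print(shlex.join(mp4split_command("C")))
```

The returned list can be passed to `subprocess.run` on a host where mp4split is installed and licensed.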

Table 3 presents the specifications of the three tested cloud instances. We selected one instance from each of the following instance families: memory optimized, storage optimized, and compute optimized. We also considered the baseline bandwidth of each cloud instance, which is the network bandwidth that remains guaranteed after 60 minutes of stress usage. For more information, please refer to the AWS EC2 network performance documentation.

| Instance type | Instance | # vCPU | # Phys. Cores | Memory (GiB) | Storage | # of EC2 IOPS | Baseline Bandwidth (Gbps) | $/hr |
|---|---|---|---|---|---|---|---|---|
| Memory optimized | r5n.large | 2 | 1 | 16 | EBS Only | 100 | 2.1 | 0.178 |
| Storage optimized | i3en.large | 2 | 1 | 16 | 1 x 1250 GB NVMe SSD | 32,500 | 2.1 | 0.27 |
| Compute optimized | c5n.large | 2 | 1 | 5.25 | EBS Only | 100 | 3 | 0.123 |

Table 3. Type of AWS EC2 instances tested. Details provided by AWS EC2 for Frankfurt region datacenter. Checked on the 10th of November 2021.
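One simple way to compare the instances in Table 3 is the hourly price per Gbps of baseline bandwidth. The Python sketch below does this with the table's values. It is a rough indicator of our own choosing that ignores storage, memory and data transfer charges.

```python
# Baseline bandwidth and on-demand price per hour, taken from Table 3.
INSTANCES = {
    "r5n.large": {"baseline_gbps": 2.1, "usd_per_hour": 0.178},
    "i3en.large": {"baseline_gbps": 2.1, "usd_per_hour": 0.27},
    "c5n.large": {"baseline_gbps": 3.0, "usd_per_hour": 0.123},
}


def usd_per_gbps_hour(spec):
    """Hourly price divided by the guaranteed (baseline) bandwidth."""
    return spec["usd_per_hour"] / spec["baseline_gbps"]


ranked = sorted(INSTANCES, key=lambda name: usd_per_gbps_hour(INSTANCES[name]))
for name in ranked:
    print(f"{name}: {usd_per_gbps_hour(INSTANCES[name]):.3f} USD per Gbps-hour")
# c5n.large: 0.041 USD per Gbps-hour
# r5n.large: 0.085 USD per Gbps-hour
# i3en.large: 0.129 USD per Gbps-hour
```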

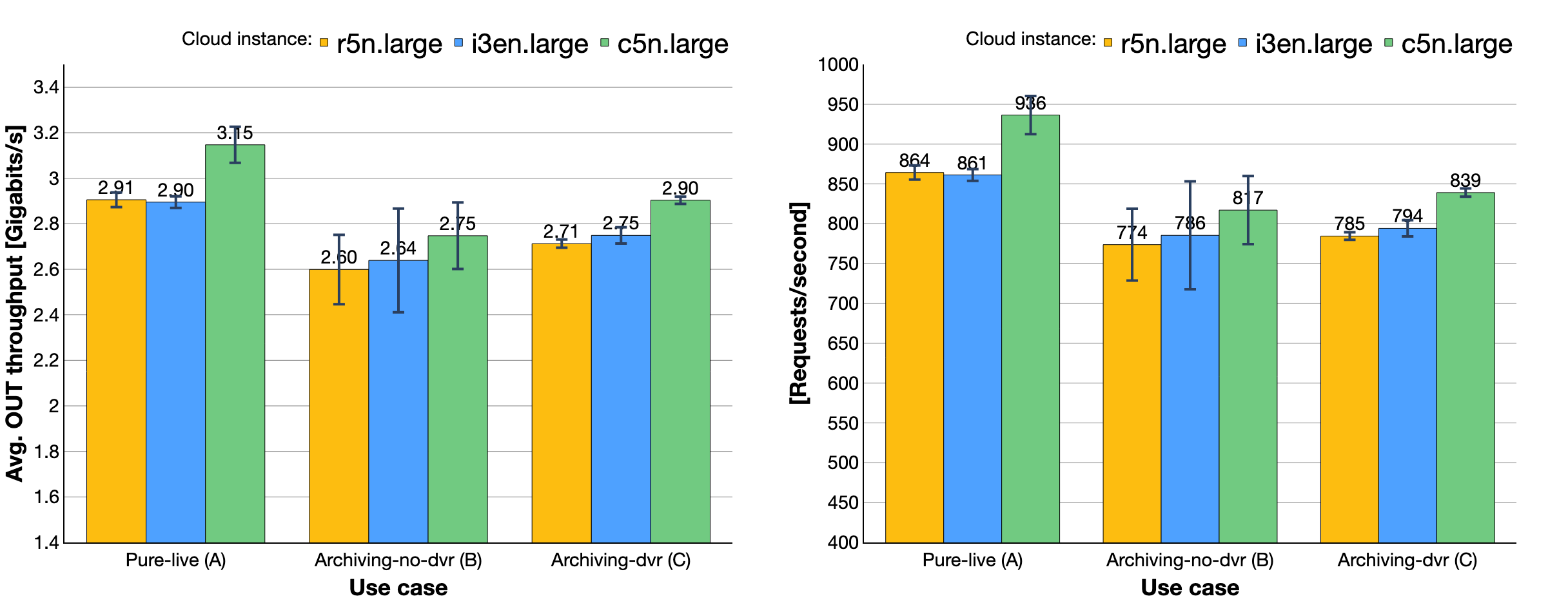

Experiment results of Live use cases

The following section shows the results of stress testing Unified Origin acting as the MPF in our testbed. We used the three types of Live video streaming configurations (Table 2) and the three chosen cloud instances (Table 3). For each test, the Workload generator created a load hitting Unified Origin directly for a duration of one hour. In this section, we present the throughput and memory consumption of the cloud instance running Unified Origin.

Figures 4 and 5 present the average output throughput and average requests per second delivered to the Workload generator, respectively. The experiments using the cloud instance c5n.large obtained the highest throughput and requests per second across all three use cases. This result correlates with the baseline bandwidth provided by AWS EC2’s Service Level Agreement (SLA).

To obtain the best performance of Unified Origin, choose cloud instances based on the baseline bandwidth instead of the burst bandwidth (see AWS EC2).

In Figures 4 and 5, both metrics show a higher variance for use case B than for use cases A and C. This happens for two reasons: 1) there is no DVR window in use case B in comparison to C, so the Workload generator requests the MPEG-DASH MPD more often, and 2) Unified Origin generates more read/write operations by saving the ingested media to disk. The combination of these two factors and the type of load makes the MPD requests in use case B more computationally expensive.

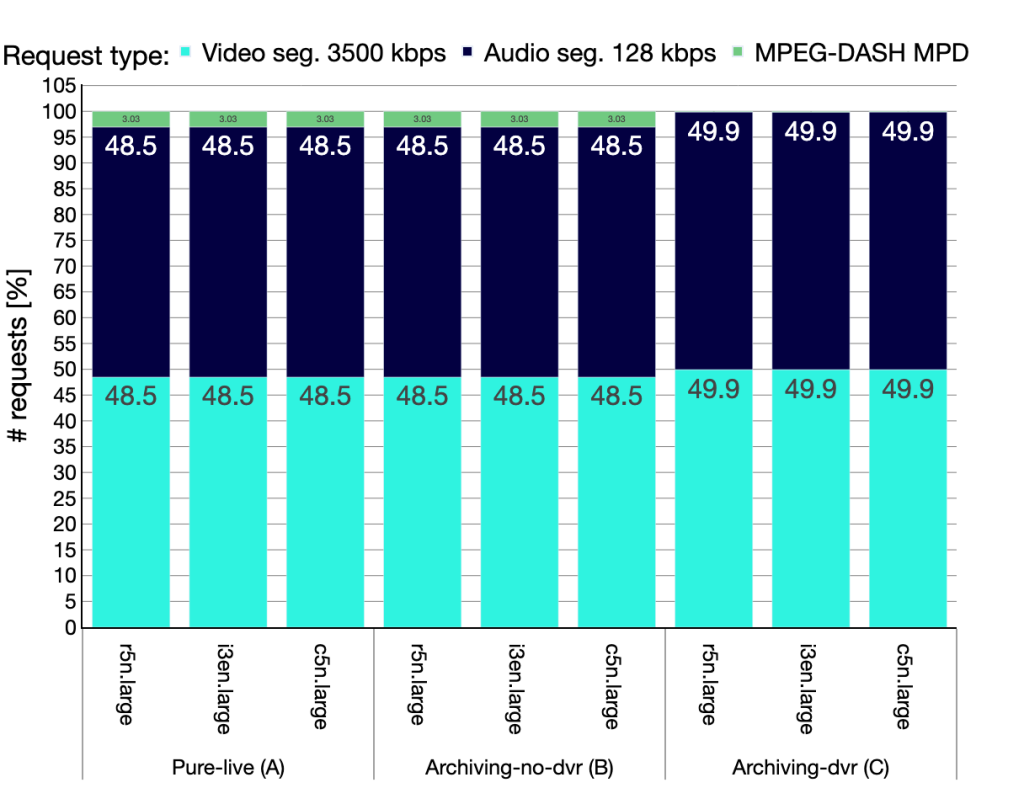

Figure 6 shows the proportion (percentage) of each request type within the total number of requests generated by the Workload generator in each experiment. In use cases A and B, MPD requests made up an average of 3.03% of all requests, while video and audio segment requests accounted for 48.5% each.
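These proportions follow from the worker loop described earlier: each pass issues one MPD request plus one audio and one video request per available segment. The Python sketch below computes the resulting request mix for N available segments. The value N = 16 is inferred from the reported percentages rather than measured, although it is consistent with a 30-second DVR window filled with 1.92-second segments.

```python
from fractions import Fraction


def request_mix(segments_per_pass: int):
    """Share of MPD, audio and video requests in one pass of the worker loop."""
    total = 1 + 2 * segments_per_pass
    return {
        "mpd": Fraction(1, total),
        "audio": Fraction(segments_per_pass, total),
        "video": Fraction(segments_per_pass, total),
    }


mix = request_mix(16)
print({kind: round(float(share) * 100, 2) for kind, share in mix.items()})
# {'mpd': 3.03, 'audio': 48.48, 'video': 48.48}
```

With a one-hour DVR window, as in use case C, the same formula gives a much smaller MPD share, since a single MPD then lists far more segments.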

Select compute optimized instances for Pure Live scenarios and storage optimized instances when archiving is enabled.

Experiment results of multiple Live channels

For the following experiments, we selected the Archiving and DVR (C) use case and the cloud instance i3en.large, which offers a total of 16 GiB of RAM. Each encoder posts the same media bitrate ladder to a separate publishing point. Note that in these experiments we did not use an Origin shield cache in front of Unified Origin, and that configuration (C) increases the media archive size on disk over time.
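To get a feel for how quickly the archive grows, the nominal size can be estimated from the bitrate ladder and the archive length. The Python sketch below uses the sum of the bitrates in Table 1 (9742 kbps) and the 24-hour archive length of configuration (C). It is an estimate under simple assumptions: nominal bitrates only, no container overhead, and sizes in decimal gigabytes.

```python
def archive_gigabytes(total_kbps: float, seconds: int) -> float:
    """Nominal archive size in GB (10^9 bytes) for media ingested at total_kbps."""
    return total_kbps * 1000 * seconds / 8 / 1e9


LADDER_KBPS = 9742       # sum of the nominal bitrates in Table 1
ARCHIVE_SECONDS = 86400  # archive_length of configuration (C), 24 hours

per_channel = archive_gigabytes(LADDER_KBPS, ARCHIVE_SECONDS)
print(round(per_channel, 1))      # 105.2
print(round(4 * per_channel, 1))  # 420.9
```

Each additional Live channel with the same ladder adds roughly the same amount, so disk capacity can become the limiting factor before bandwidth does.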

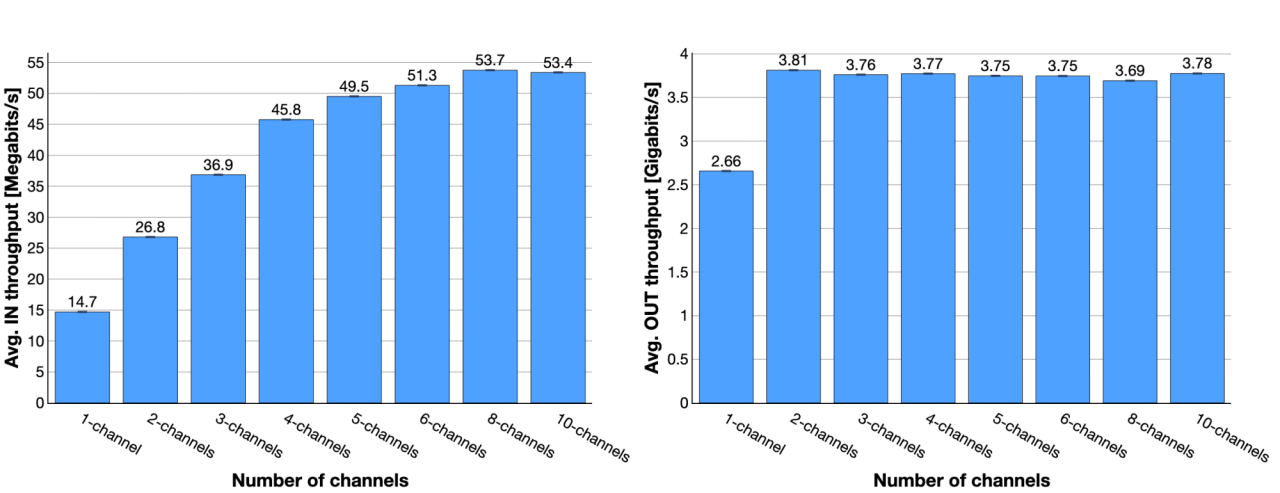

Figure 7 presents the average incoming (IN) throughput received from the encoder(s), and Figure 8 shows the average outgoing (OUT) throughput towards the Workload generator. For each experiment, we increased the number of unique Live channels at Unified Origin.

The results show a constant output throughput by Unified Origin regardless of the number of unique Live channels. Under these test conditions, Unified Origin pushed an average of 3.8 Gigabits per second of throughput towards the Workload generator.

We highly recommend setting up an Origin shield cache in front of Unified Origin to reduce its load and memory consumption (see our documentation).

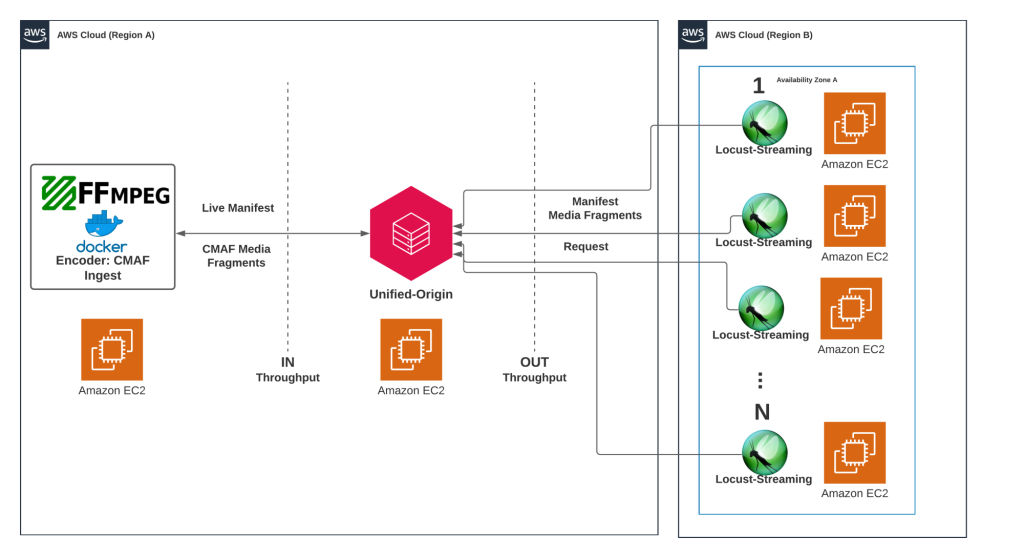

Distributed media processing

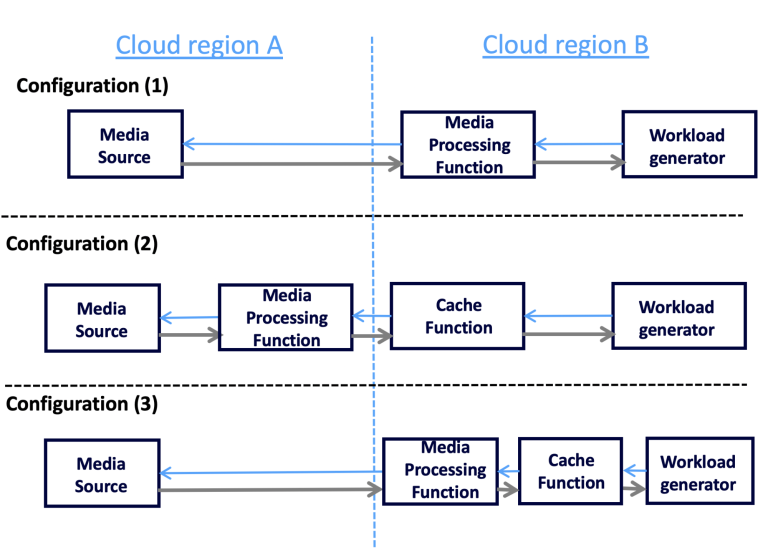

In this section we consider edge based processing at nodes in close proximity to the user. Such deployments may run on multiple clouds and edge clouds at different geographical locations. The Live media source in our setup is always a remote source sitting in a central cloud (A); in this case we use the Amazon EC2 region in Ireland. We deployed the Media Sink (or Workload Generator) in the Frankfurt EC2 cloud region (B). To test the edge based processing, we deployed the three configurations shown in Figure 9.

Configuration 2 corresponds to typical usage with a content delivery network cache, while configurations 1 and 3 are smart edge configurations where active processing happens in the media function. The cache function was based on an Nginx server. We deployed each edge configuration with the cloud instance types shown in Table 4.

| Server name | Cloud instance type | Baseline Bandwidth (Gbps) |
|---|---|---|
| Media Source (ingest-server) | c5n.2xlarge | 10 |
| Media Processing Function (origin-server) | c5n.large | 3 |
| Cache Function (cache-server) | c5n.large | 3 |
| Workload generator | 6 * c5n.4xlarge | 6 * 15 |

Table 4. Type of AWS EC2 instances tested. Details provided by AWS EC2 for Frankfurt region datacenter. Checked on the 10th of November 2021.

Experiment results for distributed media processing

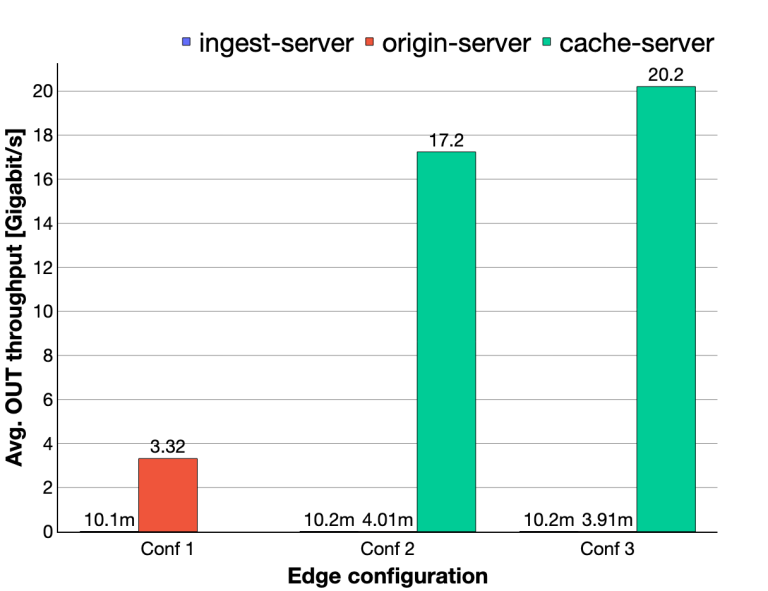

The following section presents the experiment results of distributed media processing. Figure 10 presents the average output throughput from each server: ingest-server, origin-server, and cache-server.

Figure 10 shows an average output throughput of 10.2 Mbps for each ingest-server in all three configurations. The origin-server obtained an average output throughput of 4.01 Mbps and 3.91 Mbps for configurations 2 and 3, respectively. After adding a Cache function in configurations 2 and 3, we reduced the load towards Unified Origin by 99.87% (from 3.32 Gbps to 4.01 Mbps).
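The reduction figure can be reproduced from the two throughput values quoted above. The following Python sketch shows the calculation; the function name is illustrative.

```python
def load_reduction_percent(before_bps: float, after_bps: float) -> float:
    """Relative drop in throughput, as a percentage of the original load."""
    return (1 - after_bps / before_bps) * 100


reduction = load_reduction_percent(3.32e9, 4.01e6)
print(f"{reduction:.3f}")  # 99.879
```

The result is about 99.879%, in line with the 99.87% quoted above. Put differently, the origin serves roughly one byte for every 830 bytes it served without the cache.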

The results show that without a cache (Conf. 1) the performance is not improved, as the media processing function is not shielded by a cache. The CDN-like edge configuration 2 performs better in this case, as the processing is centralized and the caching happens at the edge, which is stable. However, edge configuration 3, with both caching and media processing functions, achieves even better performance, with an average of 20.2 Gbps of throughput and a lower variance in response times.

Conclusion

In this third part of our series on load testing streaming video at scale we tested various use cases for Live video streaming.

Based on our experiments we provide some key takeaways:

- We presented the use of our CMAF ingest Live demo to emulate one or multiple encoders using FFmpeg.

- Based on the AWS EC2 network bandwidth specifications, always select a cloud instance by its baseline bandwidth instead of its burst bandwidth. If your cloud instance reaches the indicated burst bandwidth, bandwidth throttling can occur, reducing the performance of Unified Origin.

- When selecting a cloud instance for Live video streaming, check the type of configuration. Compute optimized instances are more suitable for Pure Live cases, and storage optimized instances are more suitable when you need to archive the media posted by the encoder.

- Based on the total sum of your encoder’s bitrate ladder, you can estimate the required RAM and the maximum number of Live channels that your cloud instance can handle.

- We strongly recommend using an Origin shield cache to reduce the load and memory consumption at Unified Origin.

- Selecting cloud instances that fit your media workload and the type of scaling (vertical or horizontal) will reduce your overall costs.

If you want further information about how we built a testbed based on MPEG’s NBMP standard, we encourage you to read our paper presented at MMSys 2021.